How I Built High-Performance Container Alerts for Serversinc


Application Alerts keep you informed when a container running your Application starts or exits. At its core, it’s a simple but powerful uptime monitoring feature built into Serversinc. These alerts are especially important for production apps, helping you stay aware of what’s happening on your servers in real time.

For example, if you deploy a new version of your app and the container exits unexpectedly, that’s a strong signal something went wrong. Alerts help you catch these issues early, before your users do.

Observers

To start building the Alerts feature, I needed a way to check whether the state of a Container had changed from what’s stored in the database. For example, if the status of a container in the database is “running”, I need to detect if, and when, it changes to “exited”.

One way to track this kind of change is to set up an Observer on the Container model that logs the container ID and status whenever a container is created or updated. Observers are a great way to hook into model events and track changes automatically.

The only issue with using the Observer pattern in this case is that the Heartbeat Service doesn’t use the Container model to write updates. Instead, it performs bulk inserts directly into the database. Because of this, the observer isn’t triggered, and I don’t receive any updates.
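To make the problem concrete, here is a minimal sketch of why a bulk write never reaches an observer. Serversinc itself is a Laravel application, so the real code is PHP; this Python version only illustrates the idea, and `Container`, `ContainerStore`, `save`, `bulk_upsert`, and `history` are hypothetical names, not Serversinc's actual classes.

```python
from dataclasses import dataclass


# Hypothetical stand-ins for the Container model and its table.
@dataclass
class Container:
    id: str
    status: str


class ContainerStore:
    """Tiny in-memory 'table' with a model-style and a bulk-style write path."""

    def __init__(self):
        self.rows = {}
        self.observers = []

    def save(self, container):
        # Model-style write: persist the row, then notify every observer.
        self.rows[container.id] = container
        for observer in self.observers:
            observer(container)

    def bulk_upsert(self, containers):
        # Bulk write straight to storage: no model events, so no observer runs.
        for container in containers:
            self.rows[container.id] = container


history = []
store = ContainerStore()
store.observers.append(lambda c: history.append((c.id, c.status)))

store.save(Container("web", "running"))          # observer fires
store.bulk_upsert([Container("web", "exited")])  # observer is skipped
```

After both calls the stored status is "exited", but `history` only contains the "running" entry, which is exactly the blind spot described above.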

The Heartbeat Service

To keep a long story short (ish), parts of the Heartbeat Service were rewritten to favour using the Container model over writing directly to the database. Originally, every container in the heartbeat’s payload was being written straight to the database. As the number of servers and containers grew, so did the number of updateOrCreate queries.

This works fine until it doesn’t. So a new flow was devised:

Note: I use pContainer to refer to the JSON container object from the heartbeat, and dbContainer for the one stored in Serversinc’s database.

- When a heartbeat is received, I fetch the server’s dbContainers.
- Then, looping through each pContainer, I find the corresponding dbContainer and update its status if it has changed.
- If I see a pContainer that doesn’t match any existing dbContainer, I create a new dbContainer with the correct information. This ensures the dashboard stays up to date.
- Finally, if any existing dbContainer isn’t present in the pContainers array, I remove it from the database.

This method significantly reduces the number of writes to the database. For example, let’s say only 3 out of 10 containers have had a state change. Previously, I’d write all 10 to the database like this:

10 containers = 10 writes (multiple columns written, even if none of them changed)

Now, with the new flow:

10 containers = 3 writes (only 1 column changed per container)

That is a considerable reduction in the number of DB writes, and it needs only two reads: one for the Server and one for all its Containers.
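The reconciliation flow and the 3-out-of-10 example can be sketched roughly as follows. This is an illustration in Python rather than the actual Laravel code, and it assumes each payload container carries `id` and `status` fields; the `reconcile` function and those field names are hypothetical.

```python
def reconcile(db_containers, p_containers):
    """Sync stored statuses with a heartbeat payload and count the writes.

    db_containers maps container ID -> stored status and is mutated in place.
    p_containers is the list of container objects from the heartbeat.
    """
    counts = {"updated": 0, "created": 0, "deleted": 0}
    seen = set()

    for p in p_containers:
        cid, status = p["id"], p["status"]
        seen.add(cid)
        if cid not in db_containers:
            # Unknown container: create it so the dashboard stays current.
            db_containers[cid] = status
            counts["created"] += 1
        elif db_containers[cid] != status:
            # Known container whose status changed: one single-column write.
            db_containers[cid] = status
            counts["updated"] += 1
        # Unchanged containers cost no write at all.

    # Anything stored but missing from the payload no longer exists.
    for cid in list(db_containers):
        if cid not in seen:
            del db_containers[cid]
            counts["deleted"] += 1

    return counts


# Ten stored containers, all running; the heartbeat reports three as exited.
db = {f"c{i}": "running" for i in range(10)}
payload = [
    {"id": f"c{i}", "status": "exited" if i < 3 else "running"}
    for i in range(10)
]
result = reconcile(db, payload)
```

With this input, `result` reports three updates and no creates or deletes, matching the "10 containers = 3 writes" case.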

Back to the Alerts

Now that the service was using the Container model, the Observer began writing changes to the database whenever the status column changed. That meant I could finally start building the AlertService.

But first, I needed to define the Alert models and API endpoints. The biggest concern at this stage was avoiding duplication. I already had NotificationChannels in place that listened for events and sent messages to your preferred platforms. I didn’t want to create a completely separate notifications system just for Alerts.

After thinking it through, I landed on a clean separation of responsibilities: Alerts would be responsible for what to watch, and Notification Channels would continue handling how to notify you.

So I started modeling the Alert system and came up with this structure:

Alerts aren’t traditional “alerts” in themselves; they’re more like conditions to watch for that could trigger an alert. In the image above, each alert has a key, operator, and value.

The AlertService uses these to perform a check: compare the key against the value based on the operator. For example:

Check if (key) "status" (operator) "is equal to" (value) "exited"

If the check is true, as in the case above, the AlertService triggers an “application.offline” event that’s then distributed to Discord, Slack, etc.
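A check like this boils down to a lookup from operator name to comparison function. The sketch below is a Python illustration, not the AlertService itself; the `Alert` class, the `check` function, and the "is not equal to" operator are assumptions, since the post only mentions "is equal to".

```python
from dataclasses import dataclass
from operator import eq, ne

# Hypothetical operator table; only "is equal to" appears in the post.
OPERATORS = {
    "is equal to": eq,
    "is not equal to": ne,
}


@dataclass
class Alert:
    key: str
    operator: str
    value: str


def check(alert, resource):
    """Compare the resource's value for alert.key against alert.value."""
    compare = OPERATORS[alert.operator]
    return compare(resource.get(alert.key), alert.value)


offline = Alert(key="status", operator="is equal to", value="exited")
triggered = check(offline, {"status": "exited"})
not_triggered = check(offline, {"status": "running"})
```

Keeping the operators in a table means a new comparison can be added without touching the checking logic.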

I also have a Resource Type and Resource ID. In Laravel, these form a polymorphic relationship, which lets one model relate to several different model types. So for the alert above, calling resource() can return either an Application or a Server.

Triggering the Alerts

The Alerts service was the straightforward part. It runs every minute and does the following:

- Finds any new rows in the container_histories table (created more than 1 minute ago)
- For each, finds any alerts defined for the container’s parent Application
- If any alerts match, it triggers the appropriate events
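The steps above can be sketched as a single pass over the new history rows. This Python illustration deliberately leaves out how those rows are selected and starts from a list that has already been fetched. The `collect_events` function, the `application_id` field, and the `event` field on each alert are hypothetical names.

```python
def collect_events(new_rows, alerts_by_application):
    """Return (event, application_id) pairs for every alert that matches a row."""
    events = []
    for row in new_rows:
        # Alerts are looked up by the container's parent Application.
        for alert in alerts_by_application.get(row["application_id"], []):
            matches = (
                alert["operator"] == "is equal to"
                and row.get(alert["key"]) == alert["value"]
            )
            if matches:
                events.append((alert["event"], row["application_id"]))
    return events


offline_alert = {
    "key": "status",
    "operator": "is equal to",
    "value": "exited",
    "event": "application.offline",
}
rows = [
    {"application_id": 1, "status": "exited"},
    {"application_id": 2, "status": "running"},
]
events = collect_events(rows, {1: [offline_alert], 2: [offline_alert]})
```

Here only Application 1 produces an event, because its container is the one that exited. In the real system the resulting events would be handed to the Notification Channels.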

It’s a simple service that gets the job done, and just like that I have alerts up and running for Applications.

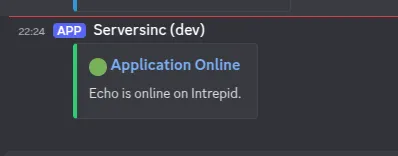

There were a few minor changes made to the Notifications job as well, mainly to improve the context of the messages being sent. Now, each message includes the Application name, the server it’s running on, and a clickable title that links directly to the container in the dashboard.

Future Improvements / Notes

Because both the Heartbeats and the AlertService run on one-minute schedules, there can be up to a two-minute delay between a container changing state and a notification being delivered.

There’s a two-part solution to this that will touch different parts of the system, but I’ll cover that when the time comes.

That’s it for this devlog, thanks for reading!

Wrap-up

Deploying apps shouldn’t be complicated or expensive. Serversinc gives you the features of a managed hosting platform while keeping full control of your own servers.

If that’s the way you want to ship projects, create a free Serversinc account and try the 14-day free trial.